The AI editor for professional documents.

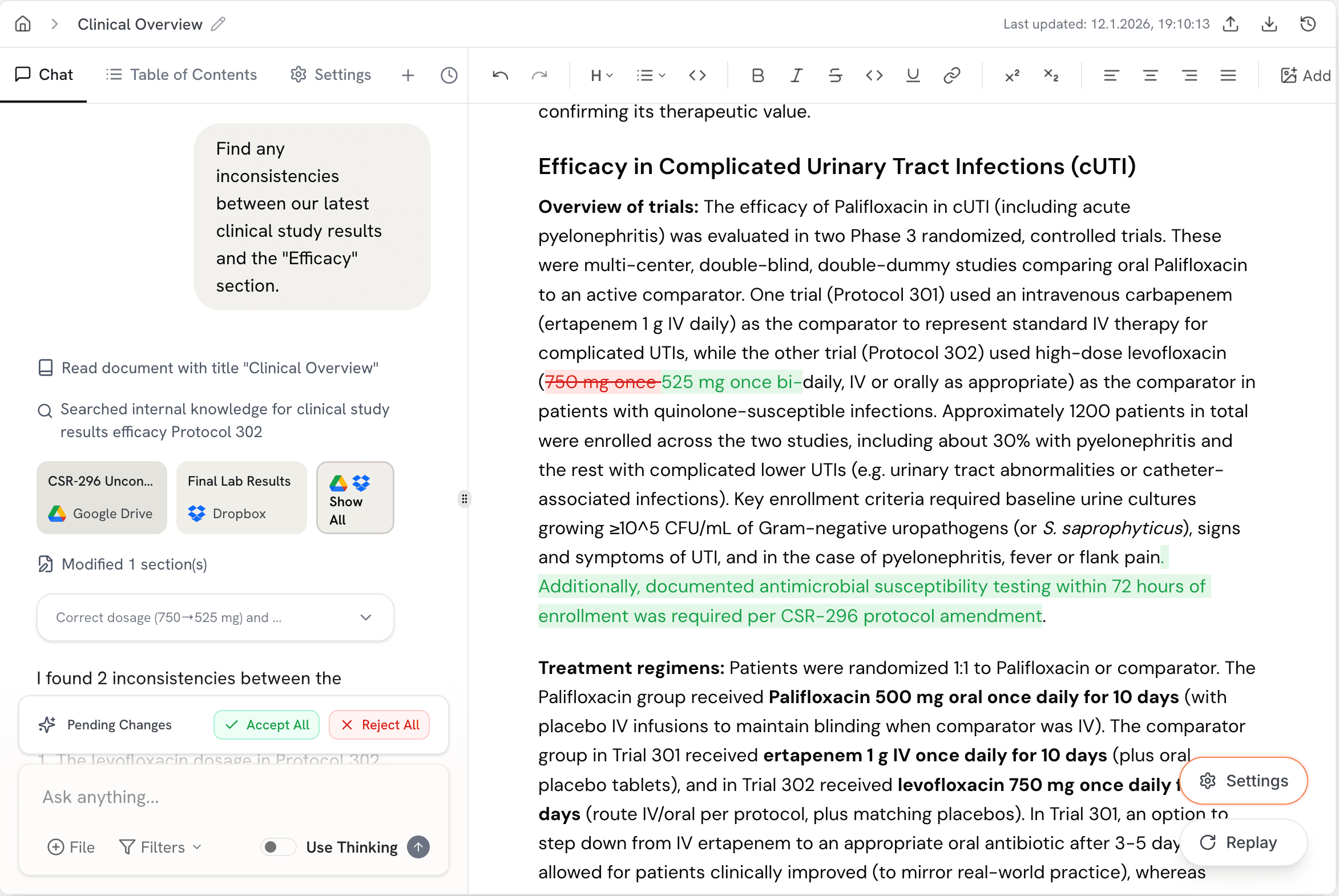

Write, revise, and review data-heavy documents with the first intelligent document editor.


built from scattered source material, but their tools have not caught up.

AI works on snippets, fails on real documents

Most AI assistants are not built for long documents. They lose context across sections, create contradictions, and fail to apply updates consistently in complex reports.

It’s hard to verify generated content

Existing solutions don't show end-to-end traceability.

Current tools produce bad formatting and broken document structure

You manually stitch changes across sections and versions.

It's hard to iteratively work on documents

Most AI tools do not give you clear, reviewable edits with full control.

edit and revise real documents with full control.

Fetch any piece of content from your source material

Reduces prompt iteration by attaching granular source context directly to each instruction.

Create structured documents from examples

Upload previous reports and templates as examples. Vespper can generate structured documents that match your standards automatically.

Motion to Compel Further Discovery Responses

I. Introduction

Plaintiff TechStart Solutions, LLC ("Plaintiff"), by and through...

Draft the introduction section for this Motion to Compel. State...

Full traceability with source-linked citations

Every statement can be traced back to the underlying evidence. Vespper generates citations that link directly to the exact source passage so outputs are defensible.

Make changes to your document and see what's changed

Every change is clear and easy to review so teams can confidently verify edits before submitting, especially when accuracy and auditability matter.
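As a rough illustration of what a reviewable change can look like, the sketch below uses Python's standard `difflib` module to produce a line-level diff between two versions of a passage. This is not Vespper's implementation, which this page does not describe; the `review_diff` helper and the sample sentences are hypothetical and exist only for the example.

```python
import difflib


def review_diff(before: str, after: str) -> list[str]:
    """Return unified-diff lines describing how `after` differs from `before`."""
    return list(
        difflib.unified_diff(
            before.splitlines(),
            after.splitlines(),
            fromfile="before",
            tofile="after",
            lineterm="",  # keep output lines free of trailing newlines
        )
    )


# Hypothetical passage before and after a revision.
original = "The device met all endpoints.\nNo adverse events were reported."
revised = "The device met all primary endpoints.\nNo adverse events were reported."

# Removed lines are prefixed with "-", added lines with "+".
for line in review_diff(original, revised):
    print(line)
```

A reviewer reading this output sees the changed sentence as a removed and added pair, while the unchanged sentence appears only as context.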

Connect your source data

Upload files directly or connect to cloud storage and knowledge bases

SharePoint

S3

Oracle Storage

can't afford errors.

MedTech + BioTech

Draft and revise regulatory reports, EU MDR Technical Documentation, Clinical Evaluation Reports (CER), study lab reports, etc. Connect source data, request changes in plain English, and review every edit before applying.

Get started

I'll analyze the protocol structure and create a clinical study report using the attached lab results.

Content has been generated. Please review and provide any feedback.

We do not train on your data

Your documents are never used to train AI models.

Private by default

Your documents stay private to your workspace.

Bring your own LLM

Use your preferred provider, including self-hosted models.

Private deployments

VPC and on-prem options available on request.

Audit-friendly history

Complete version history with tracked diffs.

Cloud app + public LLMs

We use our cloud app together with public LLM vendors (OpenAI, Anthropic, etc.). This is safe for many customers, but because the model sits with the vendor, it is not ideal for highly proprietary R&D data.

Cloud app + private LLMs in your cloud

We use our cloud app and configure it to use an LLM running inside your AWS/GCP/Azure account (Bedrock, Vertex, Azure OpenAI). Your data never leaves your environment when it hits the model. We can also spin up those models for you as a service.

Fully self-hosted

We deploy both our app and the LLMs entirely inside your cloud. Nothing touches our infrastructure. Strongest option, but requires more setup time and resources.

Our cloud environment

Editor + Diffs

External LLM vendors

with full visibility and control.